There are numerous methods of capturing printed output automatically.

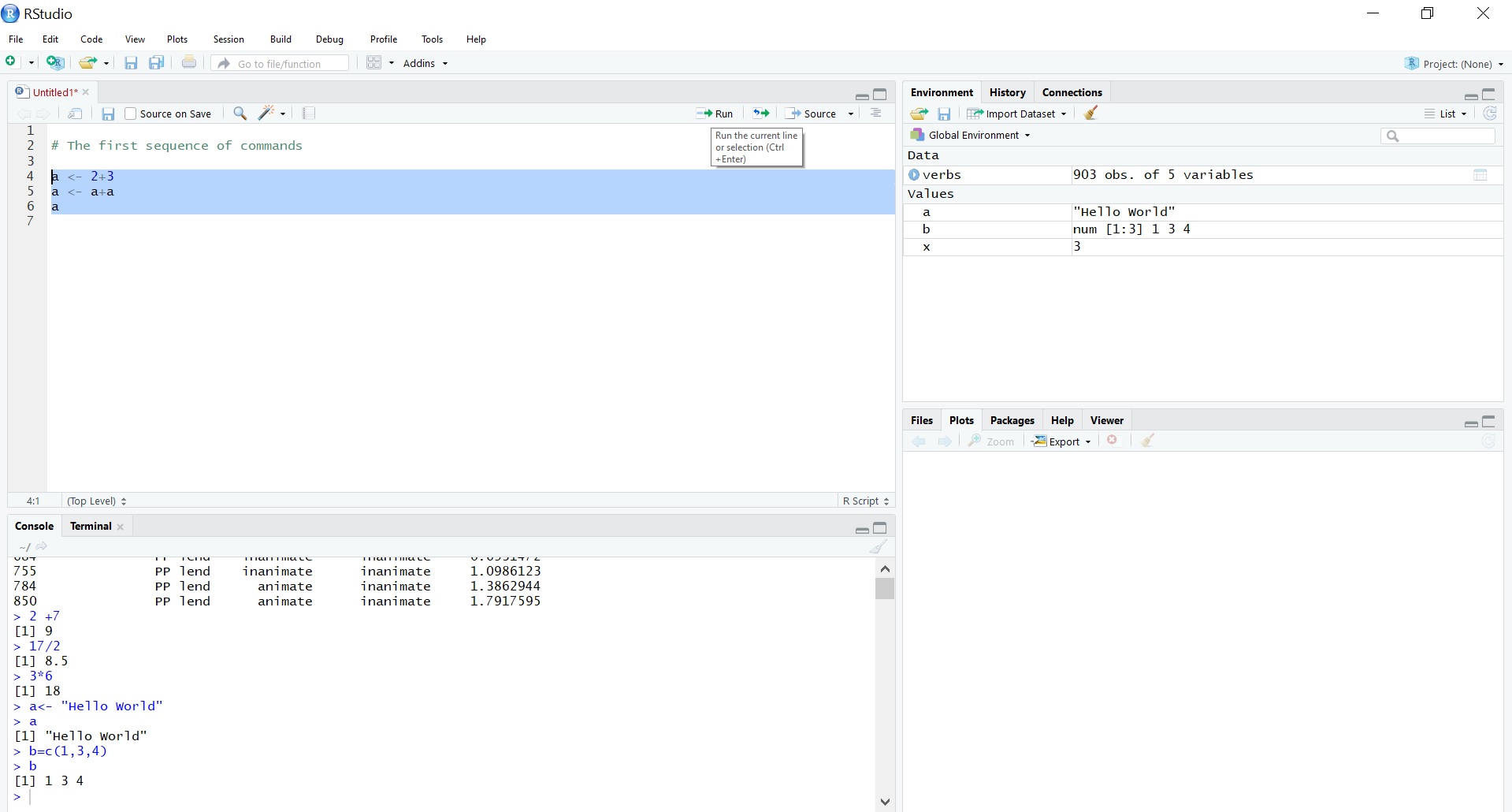

When working in RStudio, R echoes commands, prints output, and returns error messages all in one place: the Console. However, the Console only buffers a limited amount of output (1000 lines by default), which makes it difficult to work with large quantities of output. In most cases, you should be intentional about how you save output, for example by saving datasets as RDS files and regression results as formatted tables with the stargazer package. Some cases, such as creating log files, call for saving all of our output.
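A minimal sketch of two base R ways to capture printed output, plus the "intentional" alternative of saving a dataset as an RDS file. It uses the built-in cars dataset; the file names are hypothetical and live in tempdir() so the example is self-contained. stargazer is not used here so the sketch runs without extra packages.

```r
# Minimal sketch using base R and the built-in cars dataset.
# The file names below are hypothetical; tempdir() keeps the example self-contained.
log_file <- file.path(tempdir(), "analysis-log.txt")

# 1. sink(): divert everything printed to the Console into a log file.
sink(log_file)                      # printed output now goes to the file
print(summary(cars))
fit <- lm(dist ~ speed, data = cars)
print(summary(fit))
sink()                              # stop diverting; output returns to the Console
# Note: sink() captures printed output only; messages and errors still reach the Console.

# 2. capture.output(): keep the printed output of an expression as a character vector.
fit_lines <- capture.output(summary(fit))
cat(length(fit_lines), "lines captured\n")

# Being intentional instead: save the dataset itself as an RDS file.
rds_file <- file.path(tempdir(), "cars.rds")
saveRDS(cars, rds_file)
cars_again <- readRDS(rds_file)
```

Passing split = TRUE to sink() sends output to both the file and the Console, which is convenient when you still want to watch results as they appear.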

The left and right columns show the coefficients for exact = TRUE and exact = FALSE, respectively. (For brevity, we only show the non-zero coefficients.) Because 0.5 is not in the lambda sequence, the exact = FALSE values are obtained by linear interpolation, so there are some small differences in coefficient values. Linear interpolation is usually accurate enough if there are no special requirements. Notice that with exact = TRUE we have to supply, by named argument, any data that was used in creating the original fit, in this case x and y.

Users can make predictions from the fitted glmnet object. In addition to the arguments in coef, the primary argument is newx, a matrix of new values for x at which predictions are desired.

From the last few lines of the output, we see that the fraction of deviance does not change much, so the computation ends before all 20 models are fit. Otherwise, the path stops when \(\lambda\) reaches \(\lambda_{\min}\) or the fraction of explained deviance reaches \(0.999\). The internal parameters governing these stopping criteria can be changed.
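A minimal sketch of the three ideas above (exact versus interpolated coefficients, prediction with newx, and changing the stopping criteria), assuming the glmnet package is installed. The data are simulated and the object names (coef_table, pred, fit_full) are hypothetical; this is not the data behind the output described in the text.

```r
# A minimal sketch on simulated data (not the data used in the text above).
library(glmnet)

set.seed(1)
n <- 100; p <- 20
x <- matrix(rnorm(n * p), n, p)        # predictor matrix
y <- x[, 1] - 2 * x[, 2] + rnorm(n)    # response driven by the first two columns

fit <- glmnet(x, y)

# Coefficients at lambda = 0.5, a value that is almost surely not on the fitted grid.
coef_apprx <- coef(fit, s = 0.5, exact = FALSE)               # linear interpolation
coef_exact <- coef(fit, s = 0.5, exact = TRUE, x = x, y = y)  # refit; data passed by name
coef_table <- cbind(exact  = as.matrix(coef_exact)[, 1],
                    approx = as.matrix(coef_apprx)[, 1])
print(coef_table[rowSums(coef_table != 0) > 0, , drop = FALSE])  # non-zero rows only

# Predictions for new x values; s selects the lambda value(s) to predict at.
newx <- x[1:5, ]
pred <- predict(fit, newx = newx, s = c(0.1, 0.5))  # one column per value of s
print(pred)

# Stopping criteria: fdev is the minimum fractional change in deviance and
# devmax the maximum fraction of explained deviance (0.999 by default).
print(glmnet(x, y, nlambda = 20))      # may stop before 20 lambda values
glmnet.control(fdev = 0)               # turn off the fractional-change stopping rule
fit_full <- glmnet(x, y, nlambda = 20)
print(length(fit_full$lambda))
glmnet.control(factory = TRUE)         # restore the factory defaults
```

Resetting with glmnet.control(factory = TRUE) matters because these settings persist for the rest of the R session.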

# How to run regression commands in RStudio #

Glmnet is a package that fits generalized linear and similar models via penalized maximum likelihood. The regularization path is computed for the lasso or elastic net penalty at a grid of values (on the log scale) for the regularization parameter lambda. The algorithm is extremely fast and can exploit sparsity in the input matrix x. It fits linear, logistic, multinomial, Poisson, and Cox regression models.
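A minimal sketch of fitting several of these model families, assuming the glmnet and Matrix packages are installed. The family strings and the alpha argument are glmnet's own; the simulated data and object names are hypothetical. Cox models (family = "cox") need a survival-time response and are not shown.

```r
library(glmnet)
library(Matrix)

set.seed(2)
n <- 200; p <- 50
x <- matrix(rnorm(n * p), n, p)

# Linear regression with the lasso penalty (alpha = 1 is the default).
y_gauss <- x[, 1] + rnorm(n)
fit_lasso <- glmnet(x, y_gauss, family = "gaussian")

# Elastic net (0 < alpha < 1) for a binary outcome: penalized logistic regression.
y_bin <- rbinom(n, 1, plogis(x[, 1]))
fit_logit <- glmnet(x, y_bin, family = "binomial", alpha = 0.5)

# Multinomial regression for a three-class outcome (random labels, for illustration).
y_multi <- sample(1:3, n, replace = TRUE)
fit_multi <- glmnet(x, y_multi, family = "multinomial")

# Poisson regression for counts.
y_count <- rpois(n, exp(0.5 * x[, 1]))
fit_pois <- glmnet(x, y_count, family = "poisson")

# Sparse input: glmnet accepts a sparse matrix from the Matrix package.
x_sparse <- x
x_sparse[abs(x_sparse) < 1] <- 0        # zero out most entries for illustration
x_sparse <- Matrix(x_sparse, sparse = TRUE)
fit_sparse <- glmnet(x_sparse, y_gauss)

# The lambda grid decreases and is evenly spaced on the log scale.
print(head(fit_lasso$lambda))
print(head(diff(log(fit_lasso$lambda))))
```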
